In March 2025, a study published by the American Physiological Society demonstrated a proof of concept for detecting AI-written essays while reducing false positives to “essentially zero.”

TL;DR

When an essay is flagged as AI-generated only if 3 or more detectors agree, the chance of a false positive drops to nearly 0%.

Purpose of Study

The study analyzed how to maximize AI-detector accuracy while minimizing both false positives and false negatives.

Data Used

- Subject matter: STEM student essays.

- Human essays: 50 tested.

- AI-generated essays: 49 tested.

- Average word count: 150 words.

- Flagged as “AI”: A score of 80% or higher.

How the Study Worked

Part 1 – Student Participation

260 undergraduate students from Arizona State University (ASU) enrolled in the lower-division Human Anatomy and Physiology course participated. The students were instructed to write essays on fact-heavy STEM topics, which were then used to test the AI detectors.

- 2 Essays. The students were given 20 minutes to write the first essay themselves. Two days later, they received the same instructions but generated the essay with a generative AI platform of their choice.

- Survey. The students were given a survey comparing their human-written and AI-generated versions. Of the original 260 students, 190 completed the survey.

- Consent. The researchers requested the students’ consent to participate in the study, and 174 students gave their consent.

Part 2 – Random Selection

Of the 174 consenting students (348 essays in total), the researchers randomly selected 50 essay pairs (1 human essay + 1 AI-generated essay from the same student).

However, 1 of the AI essays was a corrupted file, so the final test set contained 50 human essays and 49 AI essays (99 total).
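The selection step can be illustrated with a short Python sketch. This is not the researchers' code: the student IDs, the `select_pairs` and `build_test_set` helpers, and the random seed are all hypothetical. It shows how sampling 50 pairs without replacement and dropping one unreadable AI file yields the 99-essay test set.

```python
import random

# Hypothetical IDs standing in for the 174 consenting students. Each student
# wrote one essay by hand and generated one with AI (348 essays in total).
students = [f"student_{i:03d}" for i in range(1, 175)]


def select_pairs(student_ids, n_pairs=50, seed=None):
    """Randomly choose n_pairs students and return their (human, AI) essay pairs."""
    rng = random.Random(seed)
    chosen = rng.sample(student_ids, n_pairs)  # sampling without replacement
    return [(f"{s}_human", f"{s}_ai") for s in chosen]


def build_test_set(pairs, corrupted=frozenset()):
    """Flatten pairs into (essay_id, true_label) tuples, skipping unreadable files."""
    essays = []
    for human_id, ai_id in pairs:
        for essay_id, label in ((human_id, "human"), (ai_id, "ai")):
            if essay_id not in corrupted:
                essays.append((essay_id, label))
    return essays


pairs = select_pairs(students, seed=2025)  # arbitrary seed for reproducibility
# Mirror the study: one AI essay file was corrupted and dropped.
corrupted = {pairs[0][1]}
test_set = build_test_set(pairs, corrupted)
print(len(pairs), len(test_set))  # 50 99
```

Keeping each human essay paired with the AI essay from the same student means both classes come from the same set of writers and prompts.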

Part 3 – Blindly Uploaded

All 99 essays were uploaded blindly to Copyleaks, DetectGPT, GPTZero, and Originality.ai. Each detector scored every essay from 0 to 100, and any essay scoring 80% or higher was labeled “AI-generated.”
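The labeling rule amounts to a simple threshold. The sketch below assumes that “80% or higher” means a score of at least 80 on the 0 to 100 scale; the function name `label_essay` and the sample scores are hypothetical.

```python
THRESHOLD = 80  # assumed: scores at or above this value are labeled "AI-generated"


def label_essay(score, threshold=THRESHOLD):
    """Map one detector's 0-100 score to the label used in the study."""
    if not 0 <= score <= 100:
        raise ValueError(f"score must be between 0 and 100, got {score}")
    return "ai" if score >= threshold else "human"


# Hypothetical scores from a single detector for three essays.
for score in (12, 79.5, 80):
    print(score, label_essay(score))
```

Because the cutoff is high, an essay scoring just below 80 is treated as human-written, which favors fewer false positives at the cost of more false negatives.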

Part 4 – Test Results

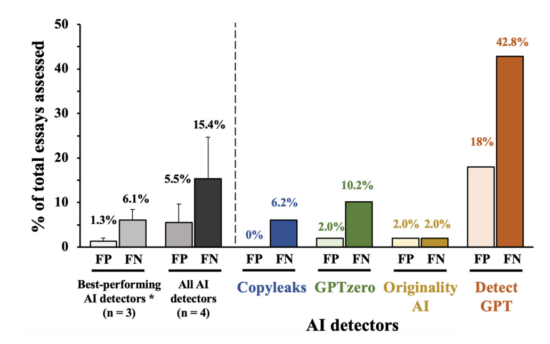

A “false positive” (FP) is human-written content labeled as AI, and a “false negative” (FN) is AI-generated content labeled as human-written.
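These definitions treat “AI” as the positive class. A minimal sketch of how one detector's predictions could be tallied into true positives (TP), true negatives (TN), FPs, and FNs follows; the helper names and the four sample labels are hypothetical.

```python
from collections import Counter


def outcome(true_label, predicted_label):
    """Classify one prediction, treating "ai" as the positive class."""
    if predicted_label == "ai":
        return "TP" if true_label == "ai" else "FP"  # FP: human text flagged as AI
    return "TN" if true_label == "human" else "FN"  # FN: AI text passed as human


def tally(true_labels, predicted_labels):
    """Count TP, TN, FP, and FN across one detector's predictions."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("each essay needs exactly one prediction")
    return Counter(outcome(t, p) for t, p in zip(true_labels, predicted_labels))


# Hypothetical labels for four essays.
truth = ["human", "human", "ai", "ai"]
preds = ["ai", "human", "ai", "human"]
counts = tally(truth, preds)
print(counts["FP"], counts["FN"])  # 1 1
```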

Score Results (see also figure above)

- Originality.ai (most accurate)
  - False Positives: 1
  - False Negatives: 1
  - Overall Accuracy: 97.98%

- GPTZero
  - False Positives: 1
  - False Negatives: 2
  - Overall Accuracy: 96.97%

- Copyleaks
  - False Positives: 0
  - False Negatives: 5
  - Overall Accuracy: 94.95%

- DetectGPT
  - False Positives: 9
  - False Negatives: 21
  - Overall Accuracy: 69.70%
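Each overall accuracy figure follows from the error counts: accuracy = (99 − FP − FN) / 99. The sketch below reproduces the reported percentages, assuming (as those percentages imply) that every detector scored all 99 essays. The `accuracy` helper is hypothetical.

```python
TOTAL_ESSAYS = 99  # 50 human + 49 AI essays

# (false positives, false negatives) as reported for each detector.
results = {
    "Originality.ai": (1, 1),
    "GPTZero": (1, 2),
    "Copyleaks": (0, 5),
    "DetectGPT": (9, 21),
}


def accuracy(false_pos, false_neg, total=TOTAL_ESSAYS):
    """Percentage of essays labeled correctly."""
    return 100 * (total - false_pos - false_neg) / total


for name, (fp, fn) in results.items():
    print(f"{name}: {accuracy(fp, fn):.2f}%")
# Originality.ai: 97.98%
# GPTZero: 96.97%
# Copyleaks: 94.95%
# DetectGPT: 69.70%
```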

Part 5 – Conclusion

To quote the researchers, “using AI detectors for consensus detection reduces the false positive rate to nearly zero.”

Although Copyleaks produced more false negatives (5) than Originality.ai or GPTZero, it did not produce a single false positive.
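Consensus detection can be sketched as a vote across detectors: an essay is labeled AI-generated only if at least a minimum number of detectors flag it. The rule below assumes the study's 80-point threshold and the “3 or more detectors” figure from the TL;DR; the function name and the per-essay scores are hypothetical, not data from the study.

```python
def consensus_label(scores, min_agree=3, threshold=80):
    """Label an essay "ai" only if at least min_agree detectors score it >= threshold."""
    votes = sum(1 for s in scores.values() if s >= threshold)
    return "ai" if votes >= min_agree else "human"


# Hypothetical scores for two essays from the four detectors in the study.
essay_a = {"Copyleaks": 95, "DetectGPT": 88, "GPTZero": 91, "Originality.ai": 99}
essay_b = {"Copyleaks": 10, "DetectGPT": 85, "GPTZero": 82, "Originality.ai": 40}

print(consensus_label(essay_a))  # ai (4 of 4 detectors agree)
print(consensus_label(essay_b))  # human (only 2 of 4 detectors agree)
```

A human essay becomes a false positive only if several detectors misjudge it at once, which is why requiring agreement pushes the false-positive rate down. Raising `min_agree` makes the rule stricter, so it can also let more AI essays through as false negatives.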

What the Study DID NOT Test

AI humanizers. Given how popular humanizing AI-generated content has become, the study offers only a glimpse into detector accuracy on text copied and pasted straight from ChatGPT, Claude, or Grammarly.

The study did not account for how humanizers replace commonly used AI words and phrasing to hide the text's AI origins, which can produce false negatives.